Image-guided interventional systems must respect a number of critical requirements and strike the right balance between computational accuracy, integration into the complex environment of the operating room or intervention suite, and real-time interaction for timely feedback. This second research thrust aims to develop innovative solutions for intraoperative shape tracking and real-time rigid or deformable image registration. We focus on groundbreaking work in therapeutic drug delivery and minimally invasive interventions to identify tracking technologies that facilitate translation into the interventional workflow. This includes topics such as automated image-based multimodal fusion, needle detection in ultrasound, and catheter localization.

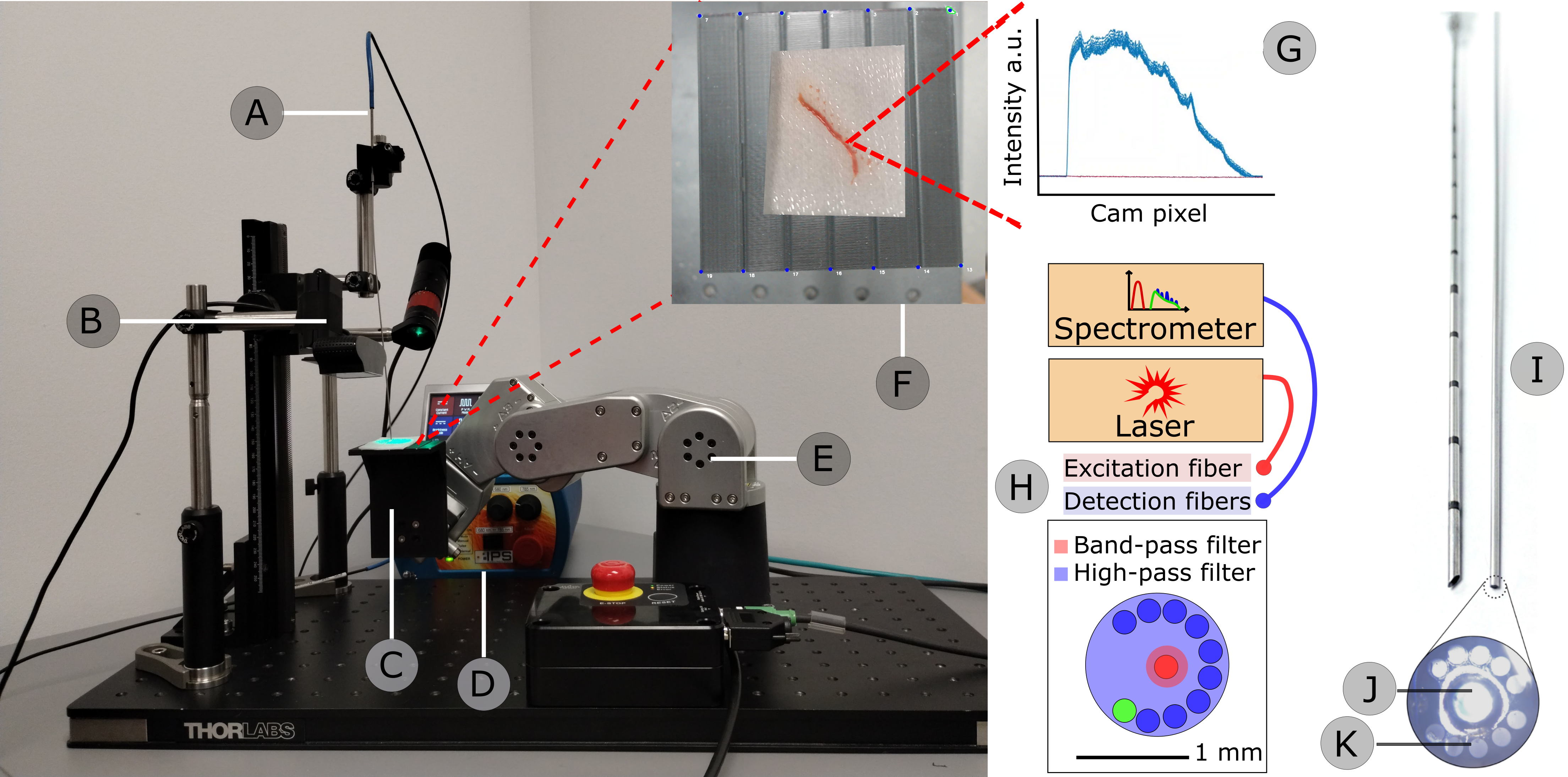

Intraoperative cancer confirmation using Raman spectroscopy

Winter 2023: David Grajales (Doctorate)

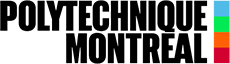

Cancer confirmation in the operating room (OR) is crucial to improve local control in cancer therapies. Histopathological analysis remains the gold standard, but there is no real-time, in situ cancer confirmation to support margin assessment or the detection of remnant tissue. Raman spectroscopy (RS), a label-free optical technique, has proven its power in cancer detection and, when integrated into a robotic assistance system, could improve both the efficiency of procedures and patients' quality of life by helping to avoid recurrence.

Methods: We propose a workflow in which a 6-DOF robotic system (optical camera + MECA500 robotic arm) assists the characterization of fresh tissue samples using RS. Three robot calibration methods are compared, and the time efficiency of the robotic workflow is compared with standard hand-held analysis. For healthy/cancerous tissue discrimination, a 1D convolutional neural network (CNN) is proposed and tested on three ex vivo datasets (brain, breast and prostate) containing processed RS spectra with histopathology ground truth.
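
The abstract does not describe the network itself. As a rough illustration only, the sketch below shows the forward pass of a small 1D CNN (two convolution, ReLU and max-pooling stages followed by a dense softmax layer) applied to a single spectrum, written in plain NumPy. The layer sizes, kernel width and the `Raman1DCNN` class are hypothetical, the weights are random and untrained, and training is omitted.

```python
import numpy as np


def conv1d(x, w, b):
    """Valid 1D convolution as used in CNN layers (i.e. cross-correlation).

    x: (C_in, L) input, w: (C_out, C_in, K) kernels, b: (C_out,) biases.
    Returns an array of shape (C_out, L - K + 1).
    """
    _, _, k = w.shape
    length = x.shape[1] - k + 1
    # windows[c, l, j] = x[c, l + j]
    windows = np.stack([x[:, j:j + length] for j in range(k)], axis=-1)
    return np.einsum("clk,ock->ol", windows, w) + b[:, None]


def relu(x):
    return np.maximum(x, 0.0)


def max_pool1d(x, size=2):
    """Non-overlapping max pooling along the spectral axis (trailing samples dropped)."""
    length = x.shape[1] // size
    return x[:, :length * size].reshape(x.shape[0], length, size).max(axis=-1)


def softmax(z):
    e = np.exp(z - z.max())  # subtract the max for numerical stability
    return e / e.sum()


class Raman1DCNN:
    """Hypothetical two-stage 1D CNN for healthy vs. cancerous spectra (untrained weights)."""

    def __init__(self, n_points, n_classes=2, channels=(8, 16), kernel=7, seed=0):
        rng = np.random.default_rng(seed)
        c1, c2 = channels
        self.w1, self.b1 = rng.normal(0, 0.1, (c1, 1, kernel)), np.zeros(c1)
        self.w2, self.b2 = rng.normal(0, 0.1, (c2, c1, kernel)), np.zeros(c2)
        # Spectral length remaining after conv -> pool -> conv -> pool
        n_feat = ((n_points - kernel + 1) // 2 - kernel + 1) // 2
        self.wf = rng.normal(0, 0.1, (n_classes, c2 * n_feat))
        self.bf = np.zeros(n_classes)

    def predict_proba(self, spectrum):
        x = np.asarray(spectrum, dtype=float)[None, :]  # one input channel
        x = max_pool1d(relu(conv1d(x, self.w1, self.b1)))
        x = max_pool1d(relu(conv1d(x, self.w2, self.b2)))
        return softmax(self.wf @ x.ravel() + self.bf)


model = Raman1DCNN(n_points=500)
probs = model.predict_proba(np.random.default_rng(1).normal(size=500))
```

In practice such a network would be trained on labelled spectra with a deep-learning framework; the point of the sketch is that the kernels slide along the wavenumber axis, so each learned filter acts as a local band detector.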

Submission: MICCAI 2023

Prostate cancer is one of the most prevalent cancers in men and is still responsible for many deaths every year. Fortunately, the extensive screening programmes put forward by healthcare authorities allow for early diagnosis, and multiple treatments are available. One such treatment, high-dose-rate (HDR) brachytherapy, involves inserting about 15 catheters into the prostate through the perineum (the region between the anus and the scrotum) under transrectal ultrasound (TRUS) guidance. These catheters are then used to sequentially introduce a highly radioactive source into the gland, following a plan also devised from intraoperative TRUS imaging. Unfortunately, because the low contrast of ultrasound does not allow affected tissues to be delineated, this technique is unsuitable for focal, tumour-targeted treatments. Consequently, the prostate is usually irradiated uniformly, without sparing healthy tissue.

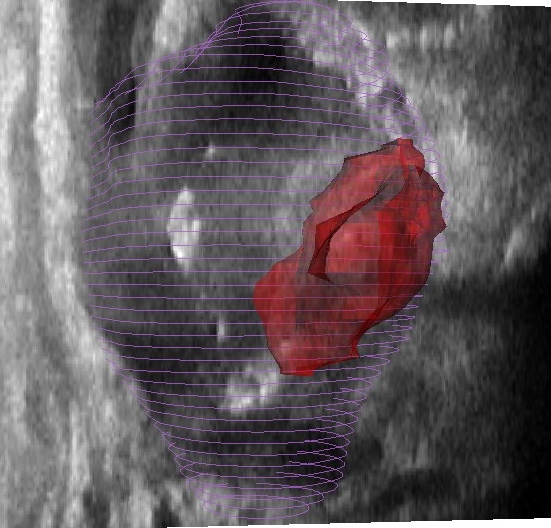

Tumours can, however, be easily delineated on magnetic resonance (MR) images, which are normally acquired for diagnosis and preoperative planning. Registering these MR images to intraoperative TRUS would allow more flexible treatment planning, including focal tumour-targeted treatment and selective dose boosting of affected tissues. Several multimodal registration techniques already exist, but applying them to prostate brachytherapy is challenging, in part because of the poor TRUS contrast, artefacts caused by the catheters and deformations caused by the TRUS probe. This project aims to leverage recent developments in deep learning, particularly fully convolutional neural networks, to tackle these issues.
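
The project text does not specify the network architecture. In many FCNN-based deformable registration methods (VoxelMorph-style approaches, for example), the network predicts a dense displacement field that is used to resample the moving image (here, MR) onto the fixed image grid (here, TRUS), and training balances an image dissimilarity term against a smoothness penalty on that field. The 2D NumPy sketch below shows only these two ingredients under that assumption; `warp_bilinear` and `smoothness_penalty` are hypothetical names and the network itself is omitted.

```python
import numpy as np


def warp_bilinear(moving, disp):
    """Backward-warp a 2D image with a dense displacement field.

    moving: (H, W) image; disp: (2, H, W) displacements (dy, dx) in pixels.
    output[y, x] = moving[y + dy, x + dx], sampled bilinearly and clamped to the border.
    """
    h, w = moving.shape
    yy, xx = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    sy = np.clip(yy + disp[0], 0, h - 1)
    sx = np.clip(xx + disp[1], 0, w - 1)
    y0, x0 = np.floor(sy).astype(int), np.floor(sx).astype(int)
    y1, x1 = np.minimum(y0 + 1, h - 1), np.minimum(x0 + 1, w - 1)
    wy, wx = sy - y0, sx - x0                      # fractional interpolation weights
    top = (1 - wx) * moving[y0, x0] + wx * moving[y0, x1]
    bottom = (1 - wx) * moving[y1, x0] + wx * moving[y1, x1]
    return (1 - wy) * top + wy * bottom


def smoothness_penalty(disp):
    """Mean squared finite-difference gradient of the displacement field."""
    d_rows = np.diff(disp, axis=1)
    d_cols = np.diff(disp, axis=2)
    return float((d_rows ** 2).mean() + (d_cols ** 2).mean())
```

A training loss would then combine a dissimilarity between `warp_bilinear(mr, disp)` and the TRUS image with a weighted `smoothness_penalty(disp)`; choosing a similarity measure that copes with the MR/TRUS contrast gap is itself part of the research problem.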

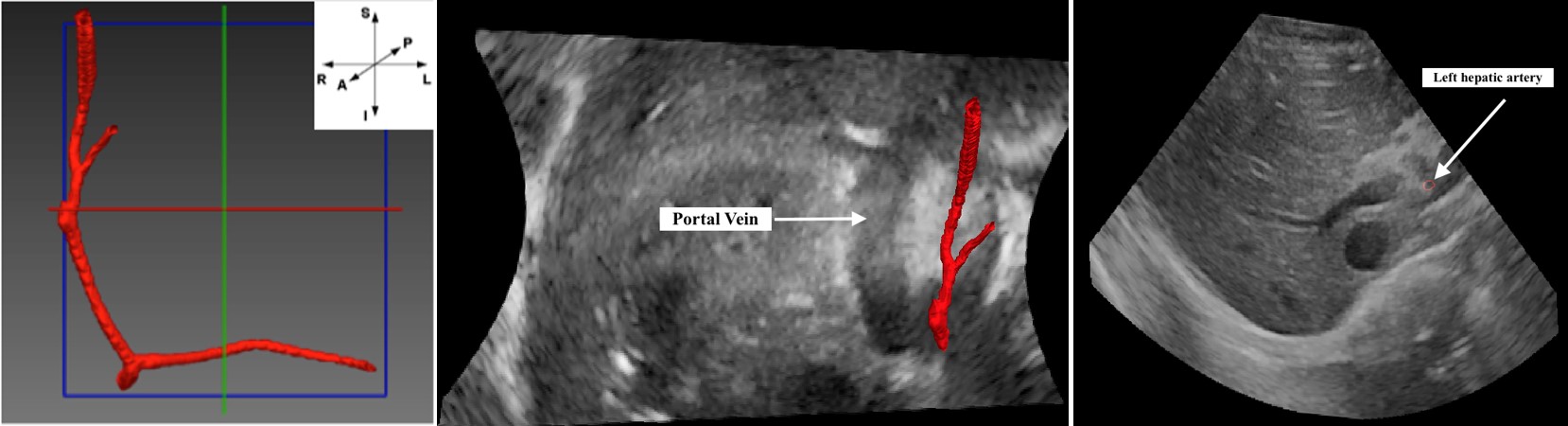

Tumor embolization by intra-arterial approaches is the current standard of care for advanced cases of primary liver cancer. Maximizing the tumor’s exposure to chemotherapy is one of the main optimization goals of these therapies. In this context, modulating the drug injection rate according to the blood velocities in the feeding arteries might help maximize drug delivery into the tumorous tissue. While Doppler ultrasound is a well-documented technique for measuring blood flow velocities, 3D visualization of the vascular anatomy with ultrasound (US) remains challenging. We propose a pipeline that facilitates Doppler measurements by enabling easy visualization of the hepatic arterial branches within a 3D ultrasound image of the liver. A 3D mesh model of the hepatic arteries is created by automatically segmenting the vessels from a 3D magnetic resonance angiography (MRA) image. A non-rigid, image-based automatic registration is then performed between the MRA and US images to map the 3D model of the arteries into the ultrasound volume, so that the arteries can be easily visualized in ultrasound.
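
The similarity measure driving the MRA/US registration is not stated. Because MRA and US intensities are not linearly related, a statistical criterion such as mutual information (MI) is a common choice for multimodal registration; the sketch below computes histogram-based MI between two equally sized images, which an optimizer would maximize over the parameters of the non-rigid transform. This is an illustrative assumption, not necessarily the metric used in the pipeline, and the synthetic images are placeholders.

```python
import numpy as np


def mutual_information(fixed, moving, bins=32):
    """Histogram-based mutual information (in nats) between two images of equal shape."""
    joint, _, _ = np.histogram2d(fixed.ravel(), moving.ravel(), bins=bins)
    pxy = joint / joint.sum()               # joint intensity distribution
    px = pxy.sum(axis=1, keepdims=True)     # marginal of the fixed image
    py = pxy.sum(axis=0, keepdims=True)     # marginal of the moving image
    nz = pxy > 0                            # empty bins contribute 0 (avoid log(0))
    return float(np.sum(pxy[nz] * np.log(pxy[nz] / (px @ py)[nz])))


rng = np.random.default_rng(0)
us = rng.random((64, 64))
mra_like = np.exp(-3.0 * us)                # nonlinear but one-to-one intensity mapping
unrelated = rng.permutation(us.ravel()).reshape(us.shape)
mi_related = mutual_information(us, mra_like)
mi_unrelated = mutual_information(us, unrelated)
```

MI stays high for the nonlinearly remapped image and drops close to zero for the shuffled one, which is why it tolerates the different contrast mechanisms of the two modalities.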

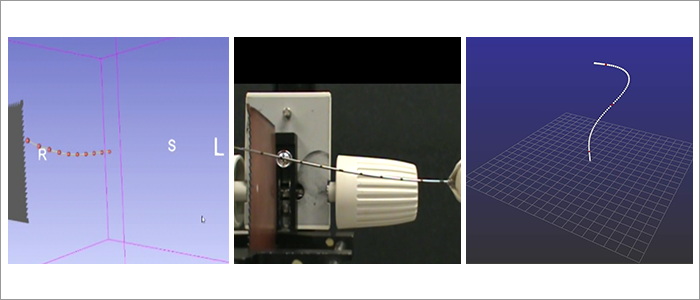

Accurate estimation of the position or shape of surgical instruments inside the human body is essential in minimally invasive surgery (MIS), for example in endoscopic surgery. Typically, instruments are guided using ultrasound (US) imaging, based on tumor locations identified on computed tomography (CT) and/or magnetic resonance (MR) images. These interventions combine different modalities through multimodal image fusion, and the tracking technologies needed to follow the shape and tip position of the catheter make the whole system bulky and expensive. Moreover, integrating large charge-coupled device (CCD) image sensors into endoscopic cameras increases the risk of damaging body tissues. As an alternative, miniaturized optical sensors, especially fiber Bragg grating (FBG) sensors, which measure axial strain with very high accuracy, can be embedded in surgical instruments to provide reliable read-out and reconstruction of high-curvature bending.
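
For reference, an FBG reflects light at the Bragg wavelength λ_B = 2·n_eff·Λ, where n_eff is the effective refractive index of the core and Λ the grating period. At constant temperature, an axial strain ε shifts this wavelength by approximately Δλ_B/λ_B = (1 − p_e)·ε, where p_e ≈ 0.22 is the effective photo-elastic coefficient commonly quoted for silica fiber. The sketch below converts a measured wavelength shift into strain; the default p_e and the example grating values are typical assumptions, not parameters of this project's sensors.

```python
PHOTOELASTIC_COEFF = 0.22  # typical effective value for silica fiber (assumed)


def bragg_wavelength(n_eff, period_nm):
    """Bragg condition: lambda_B = 2 * n_eff * Lambda (same unit as the period)."""
    return 2.0 * n_eff * period_nm


def strain_from_shift(lambda0_nm, lambda_nm, p_e=PHOTOELASTIC_COEFF):
    """Axial strain (dimensionless) from a Bragg wavelength shift at constant temperature."""
    return (lambda_nm - lambda0_nm) / (lambda0_nm * (1.0 - p_e))


lambda0 = bragg_wavelength(n_eff=1.447, period_nm=535.6)   # a grating near 1550 nm
eps = strain_from_shift(lambda0, lambda0 + 0.0012)         # 1.2 pm shift
```

With these values a 1.2 pm shift corresponds to roughly one microstrain, which is why interrogators with picometre-level wavelength resolution are needed. Temperature changes also shift λ_B and have to be compensated separately.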

This project aims to determine how accurately the shape and tip position of the catheter can be estimated from FBG measurements. To this end, a series of FBGs is integrated into three fibers, which are glued together at 120° intervals and then inserted into a cannula. In addition, vessel segmentation from MR images, as well as multimodal registration between preoperative MR and intraoperative US images, will be investigated in this project.
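
With three fibers at 120° around the neutral axis, a bend of curvature κ in direction θ strains a fiber at angular position φ_i and distance r from the axis by approximately ε_i = ε_0 − κ·r·cos(φ_i − θ), where ε_0 is a common-mode term (axial load or a uniform temperature change). The sketch below inverts this linear model at one FBG triplet by least squares. The sign convention (fibers on the inside of the bend are compressed), the fiber radius and the function name are assumptions for illustration.

```python
import numpy as np


def curvature_from_strains(strains, fiber_angles_rad, r):
    """Recover curvature, bending direction and common-mode strain at one sensor triplet.

    Model: eps_i = eps0 + a*cos(phi_i) + b*sin(phi_i),
    with a = -kappa*r*cos(theta) and b = -kappa*r*sin(theta).
    """
    phi = np.asarray(fiber_angles_rad, dtype=float)
    A = np.column_stack([np.ones_like(phi), np.cos(phi), np.sin(phi)])
    (eps0, a, b), *_ = np.linalg.lstsq(A, np.asarray(strains, dtype=float), rcond=None)
    kappa = np.hypot(a, b) / r           # curvature in 1/m if r is in m
    theta = np.arctan2(-b, -a)           # bending direction in the cross-section
    return kappa, theta, eps0


# Synthetic check: fibers at 0, 120, 240 degrees, r ~ 72 um (assumed: three touching 125 um fibers)
phi = np.deg2rad([0.0, 120.0, 240.0])
r = 72e-6
kappa_true, theta_true, eps0_true = 10.0, 0.7, 5e-6   # 10 1/m, i.e. a 10 cm bend radius
strains = eps0_true - kappa_true * r * np.cos(phi - theta_true)
kappa, theta, eps0 = curvature_from_strains(strains, phi, r)
```

Because the common-mode term is estimated separately, a uniform temperature change along the triplet does not bias the recovered curvature, one practical reason for the symmetric three-fiber layout.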

During vascular interventions using devices such as catheters, real-time 3D visualization of the catheter may be of clinical value for localizing it within the heart chambers. This is typically performed using fluoroscopic imaging with a contrast agent. However, fluoroscopy only provides two-dimensional images, and it remains challenging to obtain a precise 3D representation from a sequence of them. Procedures can become very long, and prolonged exposure to this ionizing radiation can be harmful to patients. Here, we propose an alternative that uses several fiber Bragg grating (FBG) sensors to reconstruct the three-dimensional shape of the catheter. An (n+1)-th order polynomial approximation, where n is the number of sensors integrated in the catheter, is used to monitor the position and shape of the entire catheter in real time. Preliminary results showed that the method could lead to cheaper and safer devices than MRI or ultrasound while maintaining very similar accuracy. As part of this project, considerable effort is also devoted to minimizing the temperature dependence of the FBGs and to optimizing the number of sensors and their positions along the catheter.
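
The text does not say exactly what the (n+1)-th order polynomial represents. One reading consistent with that order, used here purely as an assumption, is a planar small-deflection model: the catheter deflection y(s) is a polynomial clamped at the insertion point (y(0) = 0, y'(0) = 0), and its second derivative matches the curvature κ_i measured by each of the n sensors. The two boundary conditions plus n curvature constraints fix n + 2 coefficients, i.e. a polynomial of degree n + 1. The function names and sensor layout are hypothetical; a 3D shape would repeat the fit in two orthogonal bending planes.

```python
import numpy as np


def fit_shape_polynomial(sensor_s, curvatures):
    """Coefficients c[0..n+1] of y(s) = sum_k c_k * s**k for n curvature sensors.

    Assumes a clamped base (c0 = c1 = 0) and the small-deflection
    approximation y''(s) ~ curvature, so y''(s_i) = kappa_i at each sensor.
    """
    s = np.asarray(sensor_s, dtype=float)
    kappa = np.asarray(curvatures, dtype=float)
    n = len(s)
    powers = np.arange(2, n + 2)                        # k = 2 .. n+1
    # Row i holds d2/ds2 of s**k at s_i, i.e. k*(k-1)*s_i**(k-2)
    A = powers * (powers - 1) * s[:, None] ** (powers - 2)
    c = np.zeros(n + 2)
    c[2:] = np.linalg.solve(A, kappa)
    return c


def evaluate_shape(c, s):
    """Deflection y(s) of the fitted polynomial (coefficients in ascending order)."""
    return np.polynomial.polynomial.polyval(s, c)


# Four sensors along a 20 cm segment reading a linearly increasing curvature (synthetic)
s_sensors = np.array([0.05, 0.10, 0.15, 0.20])        # arc length in m
kappa_meas = 1.0 + 10.0 * s_sensors                    # curvature in 1/m
coeffs = fit_shape_polynomial(s_sensors, kappa_meas)
tip_deflection = evaluate_shape(coeffs, 0.20)
```

The small-deflection approximation degrades for strongly bent catheters; integrating the measured curvature along arc length avoids that limitation at the cost of a slightly more involved reconstruction.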

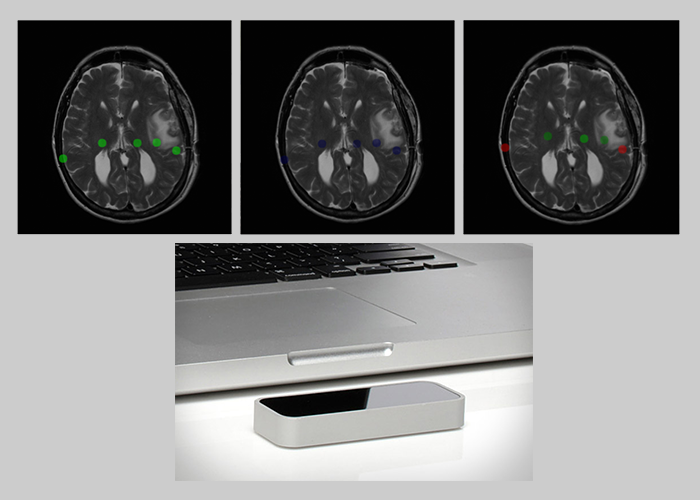

Interventional radiology has made the use of medical imaging by physicians commonplace in hospitals. However, navigating through medical scans is not very intuitive because current devices offer limited control. The objective of this project is to develop a new system for navigating through medical scans by gesture recognition, i.e. without any contact with the device, in order to preserve the sterility of the operating or autopsy room. The usability and performance of such systems are critical for their adoption by the medical community. The Leap Motion controller offers high-resolution finger gesture detection, which avoids the large upper-limb movements required by the Kinect, as well as a small size, a low price and a strong developer community. A first prototype has been developed and can be found in this repository.

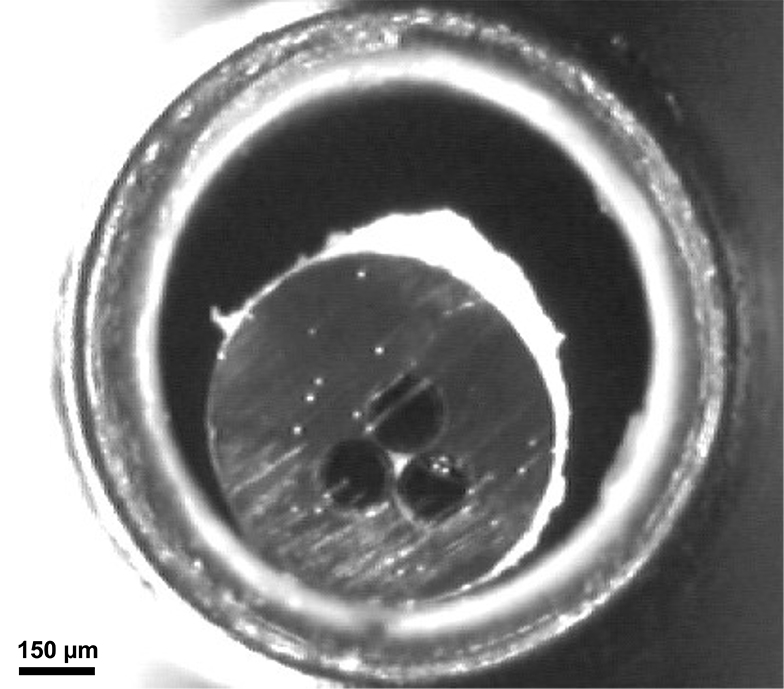

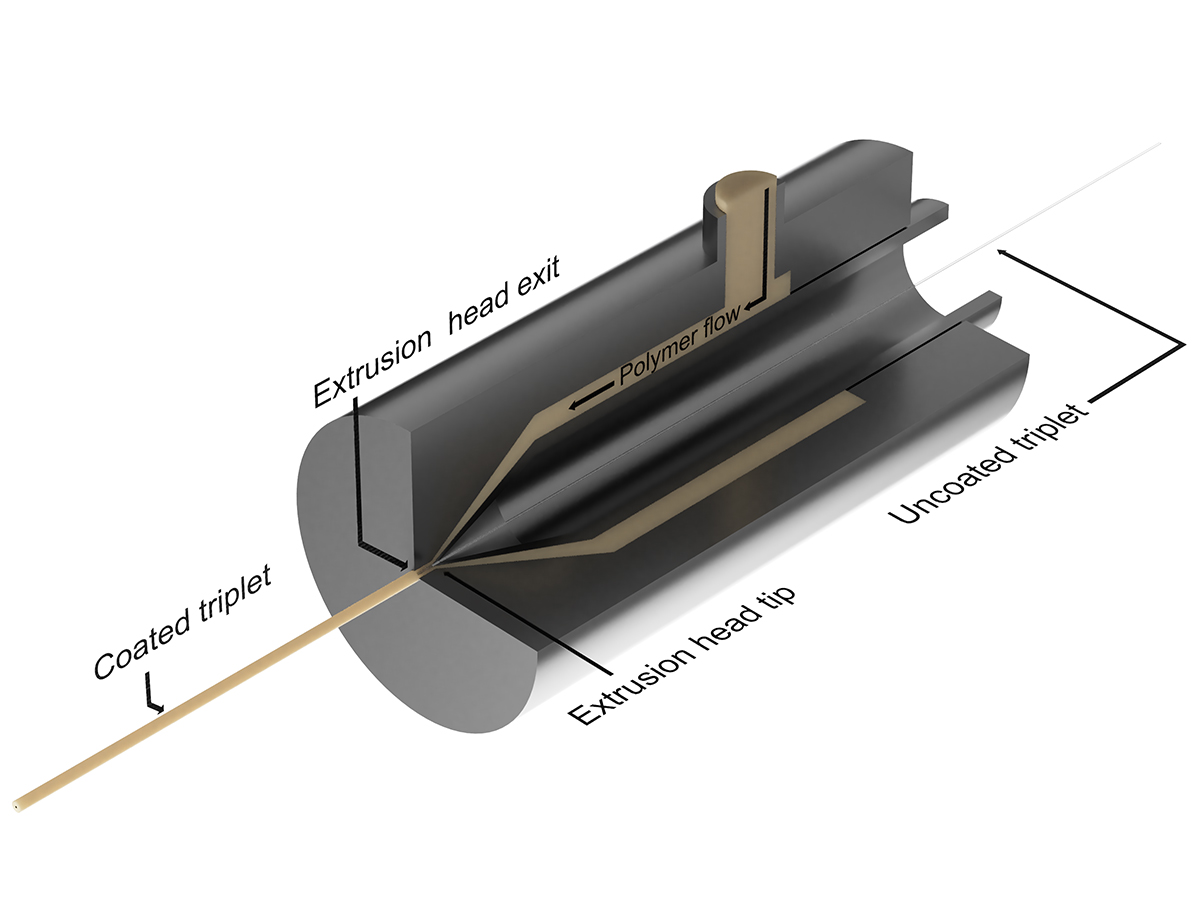

This project explores the use of the extrusion process to manufacture a new type of optical-fiber-based biomedical sensor. With extrusion, any number of fibers can be integrated into the sensor to fulfill different requirements. The extrusion process is known for its reliability and enables the manufacture of sensors of any length without reproducibility issues. The emphasis is placed on shape sensing of needles and catheters for minimally invasive surgery. Shape sensing is performed using the enhanced backscatter signal of our ROGUEs (random optical fiber gratings written by UV or ultrafast laser exposure).
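
The readout technique is not detailed here. A common way to exploit enhanced backscatter for distributed sensing, assumed for this sketch, is optical frequency-domain reflectometry: the backscatter spectrum of each short fiber segment is compared with a reference recorded in the unstrained state, and the spectral shift that maximizes their cross-correlation is proportional to the local strain (with the same (1 − p_e) scaling as for an FBG). The function names, wavelength step and p_e value are hypothetical; the sketch resolves the shift only to whole samples, whereas practical systems interpolate the correlation peak.

```python
import numpy as np


def spectral_shift(reference, measurement):
    """Integer shift (in samples) that best aligns `measurement` with `reference`.

    Uses circular cross-correlation via the FFT; a positive result means the
    measured spectrum is shifted toward higher sample indices.
    """
    ref = np.asarray(reference, dtype=float) - np.mean(reference)
    meas = np.asarray(measurement, dtype=float) - np.mean(measurement)
    xcorr = np.fft.ifft(np.fft.fft(meas) * np.conj(np.fft.fft(ref))).real
    lag = int(np.argmax(xcorr))
    n = len(ref)
    return lag - n if lag > n // 2 else lag   # map the circular lag to a signed lag


def strain_from_spectral_shift(shift_samples, step_nm, center_nm, p_e=0.22):
    """Strain from a shift of `shift_samples` wavelength bins of width `step_nm`.

    Assumes the sample index increases with wavelength and p_e ~ 0.22 (silica).
    """
    return shift_samples * step_nm / (center_nm * (1.0 - p_e))


rng = np.random.default_rng(0)
reference = rng.random(1024)                    # speckle-like backscatter spectrum
measurement = np.roll(reference, 7) + 0.05 * rng.normal(size=1024)
shift = spectral_shift(reference, measurement)
strain = strain_from_spectral_shift(shift, step_nm=0.001, center_nm=1550.0)
```

Repeating this for every segment along the fiber yields a strain profile; with several extruded fibers at known positions in the cross-section, those profiles can be turned into curvature and shape.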

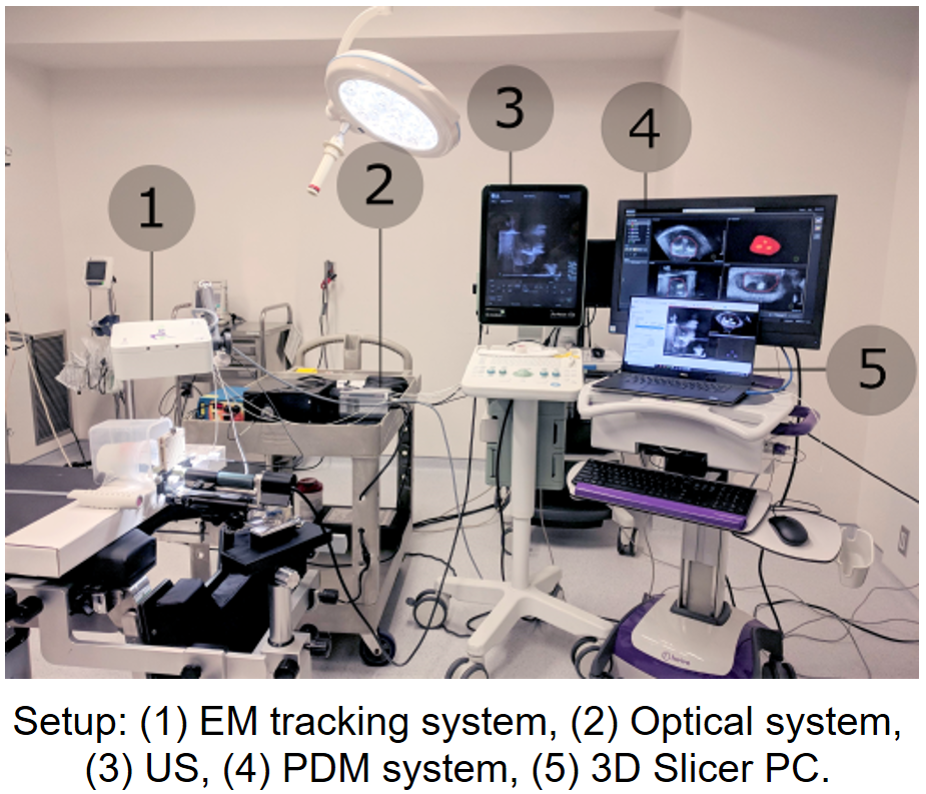

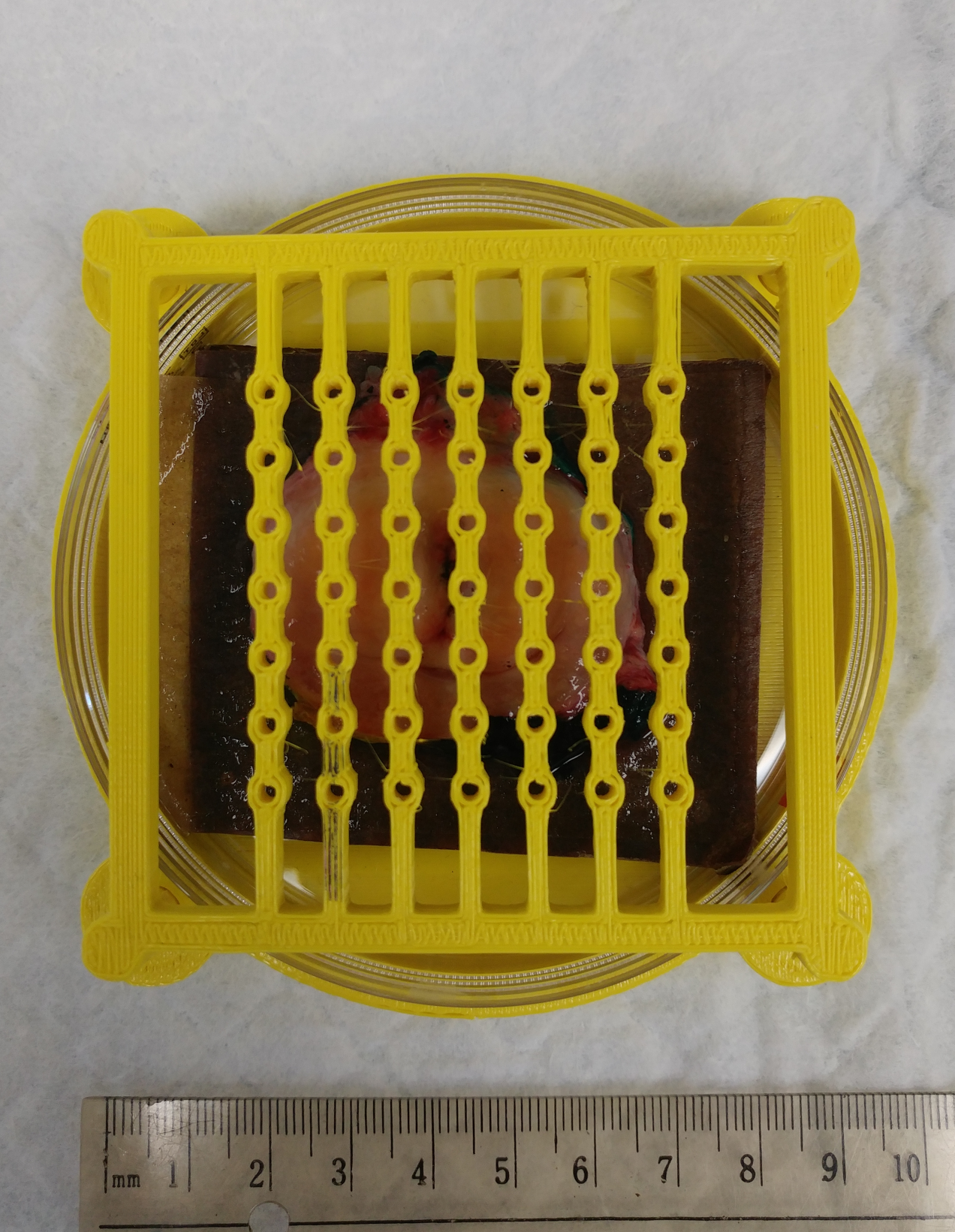

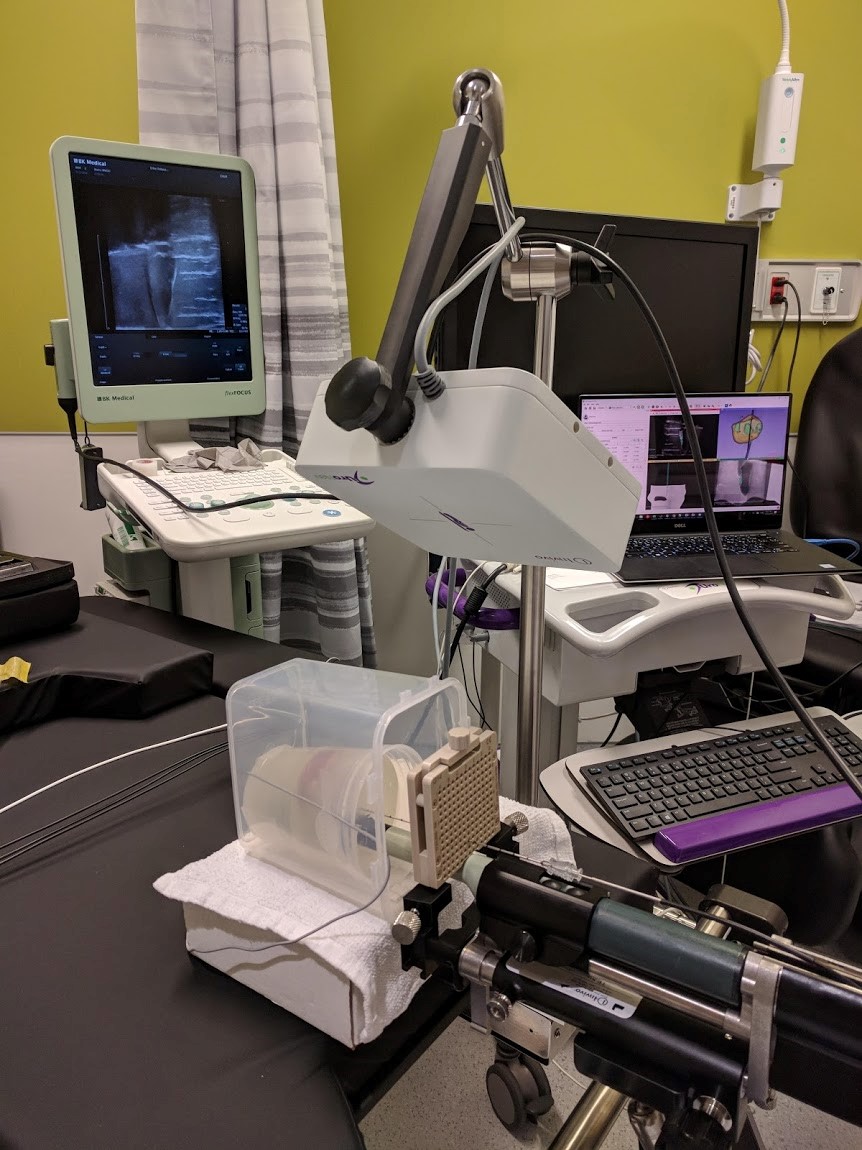

In the US, prostate cancer represents 21% of new cancer cases and 8% of estimated cancer deaths. The type and grade of the tumor are critical for determining the treatment strategy. However, the prostate biopsy procedure, the standard of care for establishing the diagnosis, has limited accuracy, resulting in false-negative biopsies in up to 30% of patients. In this study, we present an integrated optical device for prostate biopsy guidance that includes a Raman imaging technique, which has already demonstrated its potential for cancer diagnosis in the clinical environment. The design includes a multimodal imaging system based on diffuse reflectance, fluorescence and Raman spectroscopy. It also provides a navigation platform based on magnetic resonance imaging (MRI) fused with live 3D ultrasound (US) using electromagnetic (EM) tracking. The system is first validated in both synthetic phantom and ex vivo tissue experiments. Furthermore, a methodology is proposed to build a large-scale statistical model that discriminates cancer from normal tissue, which is then tested in situ on 30 patients.
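
To display a live US voxel in MRI space, a navigation platform of this kind has to chain several rigid transforms: the US image-to-probe calibration, the EM sensor pose reported by the tracker, and the MRI-to-tracker registration. The sketch below composes them as 4×4 homogeneous matrices; the transform names, millimetre units and voxel convention are assumptions, and how each transform is obtained (calibration, tracking, registration) is not shown.

```python
import numpy as np


def make_transform(rotation, translation):
    """4x4 homogeneous rigid transform from a 3x3 rotation and a translation (mm)."""
    T = np.eye(4)
    T[:3, :3] = rotation
    T[:3, 3] = translation
    return T


def us_voxel_to_mri(voxel_ijk, spacing_mm, T_probe_from_image,
                    T_tracker_from_probe, T_mri_from_tracker):
    """Map a 3D US voxel index to MRI coordinates (mm) by chaining rigid transforms."""
    p_image = np.append(np.asarray(voxel_ijk, float) * np.asarray(spacing_mm, float), 1.0)
    T_mri_from_image = T_mri_from_tracker @ T_tracker_from_probe @ T_probe_from_image
    return (T_mri_from_image @ p_image)[:3]


I3 = np.eye(3)
Rz90 = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])  # 90 deg about z
p_mri = us_voxel_to_mri(
    voxel_ijk=(2, 3, 4), spacing_mm=(0.5, 0.5, 0.5),
    T_probe_from_image=make_transform(I3, [10.0, 0.0, 0.0]),
    T_tracker_from_probe=make_transform(Rz90, [0.0, 5.0, 0.0]),
    T_mri_from_tracker=make_transform(I3, [0.0, 0.0, -2.0]),
)
```

Naming each matrix `T_<to>_from_<from>` makes the product order easy to check: adjacent frame names in the chain must match. Errors in any single link (calibration, tracking or registration) propagate directly to the displayed position.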

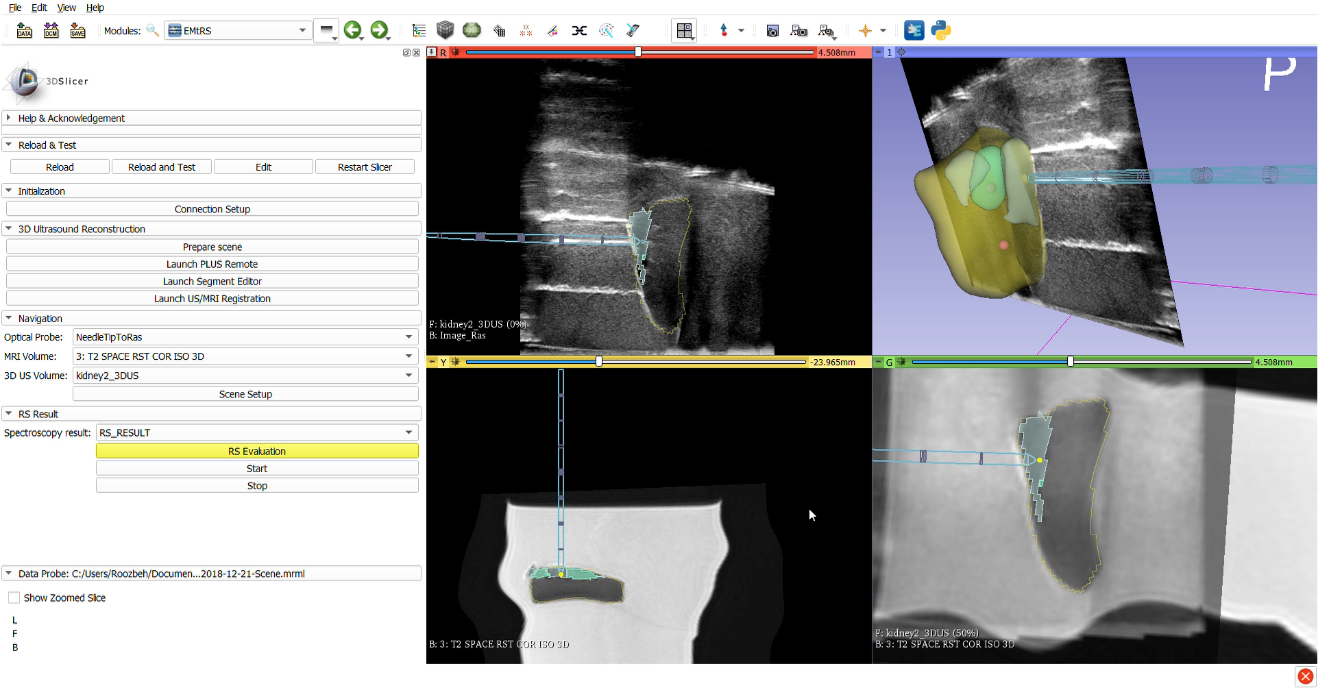

The standard of care for prostate cancer (PCa) biopsies and therapies relies on intraoperative TRUS-guided procedures, but these lead to false-negative rates that can reach 35%. A promising approach to reducing false-negative biopsy rates is to take advantage of the highly sensitive and specific optical properties of prostate tissue. The aim would be to provide the urologist or surgeon with molecular information in situ, prior to sample collection. Provided in real time during biopsy needle insertion, this information could enable more accurate tumor targeting and thereby decrease the false-negative rate. Raman spectroscopy (RS) is an optical technology that has demonstrated its ability to discriminate malignant from normal tissue and has been successfully used in vivo during brain tumor resections. In this project, we aim to design and build a navigation system for RS that can be used in situ, prior to tissue extraction, to confirm the presence of PCa. This system will use electromagnetic tracking and MRI/ultrasound fusion to navigate to the target, and RS to detect cancerous tissue.
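
Raw Raman spectra of tissue sit on a broad fluorescence background that is usually removed before classification. The project text does not describe its preprocessing; the sketch below shows one common option, an iterative modified polynomial baseline fit (each pass clips the spectrum to the fitted curve, so Raman peaks progressively stop pulling the baseline upward), followed by standard normal variate (SNV) normalization. The polynomial degree, iteration limit, function names and synthetic spectrum are illustrative assumptions.

```python
import numpy as np
from numpy.polynomial import polynomial as P


def polynomial_baseline(wavenumbers, spectrum, degree=5, max_iter=100, tol=1e-6):
    """Iterative modified polynomial fit of the fluorescence background."""
    x = np.asarray(wavenumbers, dtype=float)
    xn = 2.0 * (x - x.min()) / (x.max() - x.min()) - 1.0   # rescale to [-1, 1] for conditioning
    y = np.asarray(spectrum, dtype=float).copy()
    for _ in range(max_iter):
        baseline = P.polyval(xn, P.polyfit(xn, y, degree))
        clipped = np.minimum(y, baseline)          # peaks are cut down to the fit
        converged = np.linalg.norm(clipped - y) <= tol * np.linalg.norm(y)
        y = clipped
        if converged:
            break
    return baseline


def preprocess(wavenumbers, spectrum, degree=5):
    """Baseline subtraction followed by standard normal variate (SNV) scaling."""
    spectrum = np.asarray(spectrum, dtype=float)
    corrected = spectrum - polynomial_baseline(wavenumbers, spectrum, degree)
    return (corrected - corrected.mean()) / corrected.std()


wn = np.linspace(400.0, 1800.0, 1000)                    # wavenumber axis, cm^-1
background = 2.0 + 1e-3 * (wn - 400.0)                   # synthetic fluorescence slope
peak = 5.0 * np.exp(-((wn - 1000.0) / 10.0) ** 2)        # synthetic Raman band
processed = preprocess(wn, background + peak)
```

The degree controls a trade-off: too low and curved fluorescence remains, too high and the fit starts absorbing broad Raman bands.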

Biopsy is the standard of care for confirming a prostate cancer (PCa) diagnosis, despite its high false-negative rate and the time needed to obtain histopathological results. By exploiting the optical properties of tissue, Raman spectroscopy has great potential for real-time, in vivo cancer detection. The goal of this project is to develop navigation software combining preoperative MRI, intraoperative transrectal ultrasound (TRUS) and electromagnetic (EM) tracking, and to conduct a pilot clinical (feasibility) study of the optical probe for PCa detection and grading.
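
For navigation software of this kind to display where the optical probe's tip is, the offset between the EM sensor and the tip must be known. The text does not say how this is obtained; a standard approach, assumed here, is pivot calibration: the tip is held in a fixed divot while the probe is rotated, so every tracked pose (R_i, p_i) satisfies R_i·t + p_i = q for an unknown tip offset t (sensor frame) and pivot point q (tracker frame). Stacking these equations gives a linear least-squares problem. The function names and synthetic poses below are hypothetical.

```python
import numpy as np


def pivot_calibration(rotations, positions):
    """Least-squares pivot calibration from N tracked sensor poses.

    Solves [R_i | -I] [t; q] = -p_i for the tip offset t (sensor frame)
    and the pivot point q (tracker frame). Returns (t, q, rms_residual).
    """
    R = np.asarray(rotations, dtype=float)          # (N, 3, 3)
    p = np.asarray(positions, dtype=float)          # (N, 3)
    n = len(R)
    minus_eye = -np.broadcast_to(np.eye(3), (n, 3, 3))
    A = np.concatenate([R, minus_eye], axis=2).reshape(3 * n, 6)
    b = -p.reshape(3 * n)
    x, *_ = np.linalg.lstsq(A, b, rcond=None)
    residuals = (A @ x - b).reshape(n, 3)
    rms = float(np.sqrt(np.mean(np.sum(residuals ** 2, axis=1))))
    return x[:3], x[3:], rms


def axis_angle_to_matrix(axis, angle):
    """Rodrigues' formula: rotation matrix for `angle` radians about `axis`."""
    k = np.asarray(axis, dtype=float) / np.linalg.norm(axis)
    K = np.array([[0.0, -k[2], k[1]], [k[2], 0.0, -k[0]], [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(angle) * K + (1.0 - np.cos(angle)) * (K @ K)


# Synthetic pivoting session (hypothetical values, mm)
rng = np.random.default_rng(0)
tip_true, pivot_true = np.array([0.0, 0.0, 150.0]), np.array([10.0, 20.0, 30.0])
Rs = [axis_angle_to_matrix(rng.normal(size=3), rng.uniform(0.2, 0.6)) for _ in range(20)]
ps = [pivot_true - R @ tip_true for R in Rs]
tip, pivot, rms = pivot_calibration(Rs, ps)
# During navigation, the tip in tracker coordinates is R @ tip + p for each new pose.
```

With real tracking data the RMS residual is a useful quality check: a large value suggests the tip slipped in the divot or the probe was not rotated over a wide enough range of orientations.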