Liver cancer detection and segmentation using recent advances in deep learning (Michał Drożdżal, Ph.D. and Eugene Vorontsov)

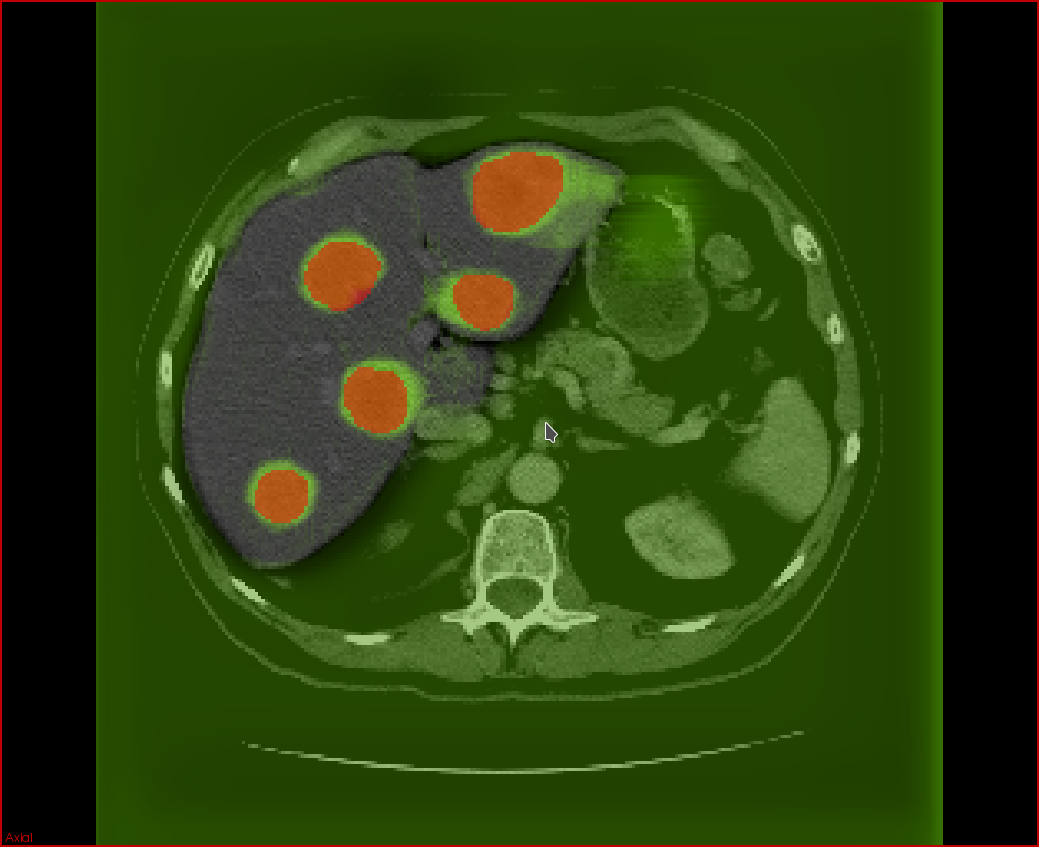

Secondary liver tumors (metastases), in aggregate with primary liver tumors (hepatocellular carcinoma), are the most common cause of cancer-related mortality in North America. Currently, there is insufficient automation in the processing of 3D scans from imaging modalities such as computed tomography (CT) and magnetic resonance imaging (MRI). In particular, there is considerable room for the development of automated tissue classification, lesion detection, and lesion and organ segmentation. In the context of cancer diagnosis, such tools can improve computer-aided diagnosis (CADx) of tumors.

The aim of the project is to help radiologists reduce both the rate of false negatives and the time required to provide an accurate diagnosis and follow-up. The goal is to develop deep-learning-based tools for cancer CADx software that can be seamlessly integrated into an existing PACS (Picture Archiving and Communication System) and RIS (Radiology Information System).

Automatic segmentation of lesions will allow accurate estimation of tumor volume and perfusion, aiding in the estimation of tumor burden and in the evaluation of treatment response according to response criteria for solid tumors. Currently, these evaluations rely on 2D measurements, such as the length and width of tumors. Deep neural networks require large amounts of data to train well-performing models. This is a challenge because few subjects exhibit the tumor types of interest, and labeling large numbers of medical images for training is difficult. Using manually labeled data from clinical collaborators, we plan to develop models that detect and segment tumors within the liver, itself segmented from CT and MRI images. These models promise improved accuracy and reduced variability with minimal human intervention. This has the potential to expand the use of medical imaging for tumor screening, diagnosis, and follow-up, ultimately improving patient care.
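To illustrate why a 3D segmentation is more informative than 2D length and width measurements, here is a minimal sketch that derives both from a binary lesion mask. It assumes a NumPy array indexed as (z, y, x), where nonzero voxels belong to the lesion, and a known voxel spacing in millimetres; the function names are hypothetical and not part of the project. The 2D helper is a simplification (bounding-box extent on the axial slice with the largest lesion area), not a clinical longest-diameter measurement.

```python
import numpy as np


def tumor_volume_ml(mask: np.ndarray, spacing_mm: tuple[float, float, float]) -> float:
    """Volume of a binary lesion mask in millilitres (hypothetical helper).

    mask: 3D array indexed (z, y, x); nonzero voxels belong to the lesion.
    spacing_mm: voxel size along (z, y, x) in millimetres.
    """
    if mask.ndim != 3:
        raise ValueError("expected a 3D mask")
    voxel_volume_mm3 = float(np.prod(spacing_mm))
    n_voxels = int(np.count_nonzero(mask))
    return n_voxels * voxel_volume_mm3 / 1000.0  # 1 mL = 1000 mm^3


def largest_axial_extent_mm(mask: np.ndarray, spacing_mm: tuple[float, float, float]) -> tuple[float, float]:
    """Bounding-box (height, width) in mm on the axial slice with the most lesion voxels.

    A simplified stand-in for manual 2D length/width measurements (assumption).
    """
    # Count lesion voxels on each axial slice and pick the largest cross-section.
    areas = mask.reshape(mask.shape[0], -1).astype(bool).sum(axis=1)
    z = int(np.argmax(areas))
    ys, xs = np.nonzero(mask[z])
    if ys.size == 0:
        return 0.0, 0.0
    height = (ys.max() - ys.min() + 1) * spacing_mm[1]
    width = (xs.max() - xs.min() + 1) * spacing_mm[2]
    return float(height), float(width)


if __name__ == "__main__":
    # Synthetic example: a 10 x 10 x 10 voxel cube with anisotropic spacing.
    mask = np.zeros((20, 20, 20), dtype=np.uint8)
    mask[5:15, 5:15, 5:15] = 1
    spacing = (2.0, 0.5, 0.5)
    print(tumor_volume_ml(mask, spacing))
    print(largest_axial_extent_mm(mask, spacing))
```

Two lesions with identical axial extents can differ greatly in volume, which is the information a full 3D segmentation recovers.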

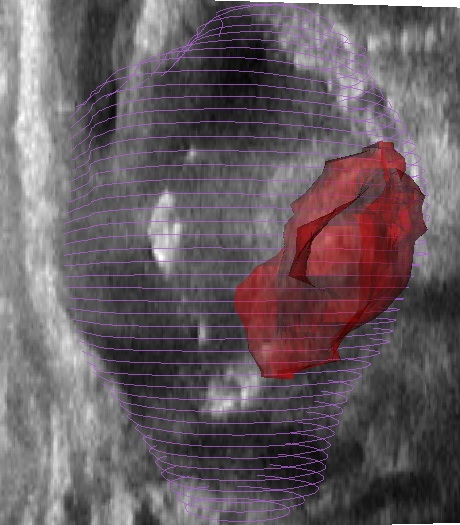

Multimodality Registration Using Deep Learning for Improved Guidance of Prostate Brachytherapy (Jean-Francois Pambrun, Ph.D.)

Prostate cancer is one of the most common cancers in men and is still responsible for many deaths every year. Fortunately, screening programs promoted by health authorities allow for early diagnosis, and multiple treatments are available. One such treatment, high dose rate (HDR) brachytherapy, involves inserting about 15 catheters into the prostate through the perineum, the region between the anus and scrotum, under transrectal ultrasound (TRUS) guidance. These catheters are then used to sequentially introduce a highly radioactive source into the gland, following a treatment plan also devised from intraoperative TRUS images. Unfortunately, because the low ultrasound contrast does not allow delineation of affected tissues, this technique is unsuitable for focal, tumour-targeted treatments. Consequently, the prostate is usually irradiated uniformly, without sparing healthy tissues.

However, tumours can be easily delineated on magnetic resonance (MR) images, which are normally acquired for diagnosis and preoperative planning. Registering these MR images to intraoperative TRUS would allow more flexible treatment planning, including focal tumour-targeted treatment and selective dose boosting of affected tissues. Several multimodality registration techniques already exist, but applying them to prostate brachytherapy is challenging. This is partly due to the poor TRUS contrast, artefacts caused by the catheters, and tissue deformation caused by the TRUS probe. This project aims to leverage recent developments in deep learning, particularly fully convolutional neural networks, to tackle these issues.